Back in the day, we learned in statistics that you need a sample of at least 2% of the population to make statistically significant inferences about that population. In common parlance, “statistically significant” means “valid”.

Nevertheless, if you’re like me, you regularly come across surveys that use a sample size of around 2000 to make inferences about populations of hundreds of millions. Below are examples of surveys whose sample sizes are far below 2% of the population:

EXAMPLE 1

British consumers don't understand what open banking is any more than Americans do, even though in theory they've had it for a year https://t.co/p3GOSwe28s #OpenBanking #datasharing #API @AmerBanker @RFIGroup_

— Penny Crosman (@pennycrosman) December 19, 2018

EXAMPLE 2

Not even 2K…

France, Ifop poll:

President Macron Approval Rating

Approve: 27% (+4)

Disapprove: 72% (-4)

Field work: 11/01/19 – 19/01/19

Sample size: 1,928

— Europe Elects (@EuropeElects) January 20, 2019

EXAMPLE 3

An Amazon Checking Account Could Displace $100 Billion In Bank Deposits (But It Won’t)

EXAMPLE 4

Most Americans foresee death of cash in their lifetimes

EXAMPLE 5

For reference, the population of Great Britain is ~60 million and that of the USA is ~300 million.

Taking a sample size of about 2000, the samples in these studies work out to roughly 0.0033% and 0.00067% of the respective populations.
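The arithmetic behind those percentages is easy to check. Here is a minimal sketch that assumes a sample size of 2000 for both countries (the figure mentioned earlier in this post) and the rough population numbers quoted above. The function name `sample_percent` is mine, purely for illustration.

```python
# Sample size expressed as a percentage of the population.
# Assumptions: a sample of 2,000 respondents and the rough population
# figures quoted in the post.
populations = {"Great Britain": 60_000_000, "USA": 300_000_000}
sample_size = 2_000


def sample_percent(n, population):
    """Return the sample size n as a percentage of the population."""
    return 100.0 * n / population


for name, pop in populations.items():
    print(f"{name}: {sample_percent(sample_size, pop):.5f}% of the population")
```

Both figures are several hundred times smaller than the 2% bar.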

Since they’re well short of our 2% bar, should we debunk the findings of these studies?

At one point, I thought yes.

But, now, I’m not so sure. Many of these studies have been published by well-reputed media outlets and can’t be dismissed so easily.

So, I decided to probe the topic further.

I came across this online sample size calculator, which says a sample size of 1006 yields results at a 95% confidence level with a 3% margin of error for a population of 300 million.

Not convinced by the above, I found a formula for calculating the margin of error here. When I plugged in the values, the results tallied with the calculator’s.
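The linked formula is not reproduced in this post, so the sketch below assumes the common textbook formula for the margin of error of a proportion, z * sqrt(p * (1 - p) / n), with z = 1.96 for a 95% confidence level and p = 0.5 as the worst case. The function name `margin_of_error` is mine. Note that the size of the population does not appear in this formula at all.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Margin of error for a proportion estimated from a simple random sample.

    Assumptions: n is the sample size, p is the assumed proportion
    (0.5 is the most conservative choice), and z = 1.96 corresponds to
    a 95% confidence level.
    """
    return z * math.sqrt(p * (1 - p) / n)


for n in (1006, 2000):
    print(f"n = {n}: margin of error is about {100 * margin_of_error(n):.2f}%")
```

Under these assumptions, a sample of 1006 gives a margin of error of about 3%, and a sample of 2000 brings it down to a little over 2%.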

I was also intrigued by the following line on the sample size calculator website:

“The sample size doesn’t change much for populations larger than 20,000.”
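One way to see why this might be so is to compute the required sample size for different population sizes. The sketch below assumes the standard sample size formula for a proportion together with the usual finite population correction. I don't know which formula the calculator actually uses, so its figures may differ somewhat from these. The function name `required_sample_size` is mine.

```python
import math


def required_sample_size(population, margin=0.03, p=0.5, z=1.96):
    """Sample size needed for a given margin of error.

    Assumptions: simple random sampling, 95% confidence (z = 1.96),
    worst-case proportion p = 0.5, and the usual finite population
    correction n = n0 / (1 + (n0 - 1) / N).
    """
    n0 = (z ** 2) * p * (1 - p) / (margin ** 2)  # size for an infinite population
    n = n0 / (1 + (n0 - 1) / population)         # shrink it for a finite population
    return math.ceil(n)


for population in (1_000, 20_000, 1_000_000, 300_000_000):
    print(f"population {population:>11,}: sample of {required_sample_size(population):,}")
```

The required sample grows quickly for small populations but flattens out once the population runs into the tens of thousands, because the correction term (n0 - 1) / N becomes negligible.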

This runs totally contrary to the standard creed: “The larger the population, the larger the sample required for testing.”

What gives?

I suspect it has something to do with the composition of a population.

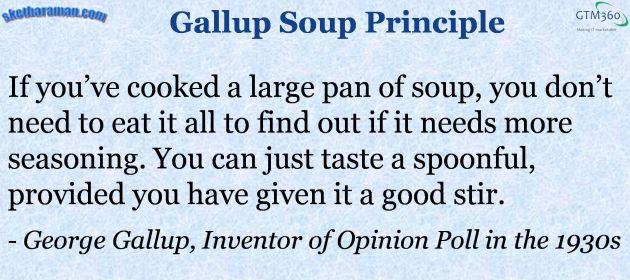

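Surveys make a tacit assumption that their samples are “truly representative” of the population. To understand that concept, let’s take the following maxim, which I call the Gallup Soup Principle: you don’t need to eat the whole pot to know how the soup tastes; a spoonful is enough, provided the soup has been given a good stir.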

Some populations are homogeneous, i.e. of the same kind. For example, liquids that have been given a good stir (unlike James Bond’s Martinis, which are shaken, not stirred!).

Other populations are heterogeneous, i.e. diverse in character. For example, the terrain of the earth (comprising plains, mountains, water bodies, etc.).

A nation tends to be homogeneous on some attributes (e.g. nationality of its residents) but heterogeneous on some others (e.g. income).

In this post,

- Homogeneous means “homogeneous by nature” and / or “becomes homogeneous after being given a good stir”.

- Heterogeneous means “heterogeneous by nature” and “does not become homogeneous even after being given a good stir”.

Unlike liquids, you can’t stir many populations, so “good stir” effectively happens by taking a random sample of those populations.

For homogeneous populations, a sample size of 2K is akin to the spoonful of soup in the Gallup Soup Principle. Accordingly, we can draw valid inferences about the whole population, however large it is, based on a survey conducted with such a small sample size.

For heterogeneous populations, a sample size of 2K is unlike the spoonful of soup. Accordingly, inferences drawn from a survey with such a small sample may not be valid for the population. But that does not stop people from conducting such surveys, which explains why results of so many studies are misleading and / or contradictory to one another.

Misleading results

- Indians don’t speak Hindi (Survey of 2000 Indians in Tamil Nadu, a southern state of India in which Tamil is the local language)

- America has no mountains (Survey of the terrain of 2000 square miles of Kansas, a state in the USA that has only plains)

- 95% of banks have innovation labs (Survey of 100% of banks that have innovation labs)
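The first of these examples can be mimicked with a small simulation. This is only a sketch on made-up data: a hypothetical population of 10 regions, one of which answers “yes” far less often than the rest. All the numbers and names below are my assumptions, not results from any real survey. It compares a sample of 2000 drawn from that one region with a random sample of 2000 drawn from the whole population.

```python
import random

rng = random.Random(0)  # fixed seed so that the run is repeatable

REGIONS = 10
PEOPLE_PER_REGION = 20_000
# Hypothetical "yes" rates: region 0 is very unlike the other nine.
yes_rate = [0.05] + [0.50] * (REGIONS - 1)

# Each person is a (region, answered_yes) pair.
population = [
    (region, rng.random() < yes_rate[region])
    for region in range(REGIONS)
    for _ in range(PEOPLE_PER_REGION)
]


def share_yes(people):
    """Fraction of the given people who answered yes."""
    return sum(answer for _, answer in people) / len(people)


true_share = share_yes(population)

# "Good stir": 2,000 people drawn at random from the whole population.
random_sample = rng.sample(population, 2_000)

# No stir: 2,000 people drawn from region 0 only.
region_zero = [person for person in population if person[0] == 0]
one_region_sample = rng.sample(region_zero, 2_000)

print(f"whole population:  {true_share:.3f}")
print(f"random sample:     {share_yes(random_sample):.3f}")
print(f"one-region sample: {share_yes(one_region_sample):.3f}")
```

In this toy setup, the sample taken from a single region lands far from the population figure, while the random sample of the same size lands close to it.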

Contradictory results

- Cash is dead v. Cash in circulation is growing

- Branch is dead v. Banks are opening new branches

- Omnichannel shopping is BS v. Book Online & Collect At Store is the future of retail.

Such disingenuous surveys provide the underpinning for Shevlin’s Law.

Shevlin’s Law: "For every data point that proves one point of view, there are two that refute it." ~ https://t.co/VysoVR1vEp via @rshevlin .

Also see https://t.co/jMryL4E6F9 . #Statistics #Lying #BigData #Quantipulation

— Ketharaman Swaminathan (@s_ketharaman) June 11, 2020

A survey with a sample size of merely 2000 can deliver statistically significant results for a population of millions provided the sample is representative of the population, which in turn depends on how homogeneous or heterogeneous the population is.

But there’s no quantitative measure of a population’s composition, so it’s not possible to rigorously prove that a sample is representative of the population.