According to the old adage, “something is better than nothing”.

This has become common wisdom because it’s often true.

But there are a few critical situations where it’s fatally flawed. In this post, I’ll give a few examples.

#1. CACHE MEMORY

Back in the day, the IT hardware company I worked for launched two models of minicomputers. The higher-end model had 64KB of cache memory and the lower-end model had no cache memory.

You can probably guess how far “back in the day” this goes from the KB in the above numbers, but please bear with me, because this is where I was first exposed to the counterintuitive theme of this post. Besides, I assure you that the context is still very relevant today: cache still works the same way, and its use has since expanded to browsers, CDNs, and much more.

If you’re unfamiliar with the basic operations of cache, I recommend the following video.

My company’s cache sizing was done by R&D and marketing, guided by hardcore engineering principles and price-performance considerations.

A competitor tried to outflank us by launching a model with 32KB cache at the same price point as our 0KB model, with the claim that 32KB cache is superior to 0KB.

We knew the competitor’s move was a sales gimmick but, since it preyed on the common wisdom that something is better than nothing, we couldn’t ignore it, even though it was false.

Let me explain how we blew its 32KB model out of the water and proved that, contrary to popular opinion, no cache is better than some cache.

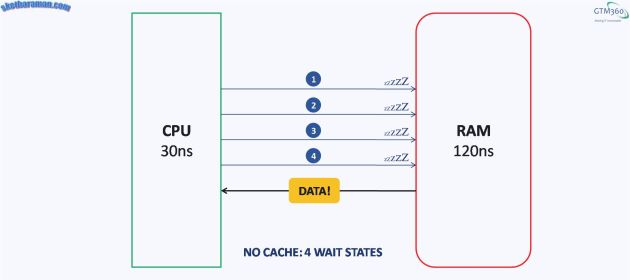

As explained in the above video, the CPU fetches data from RAM and processes it. In those days, CPU response time was ~30ns and RAM response time was ~120ns. Since response time is the reciprocal of speed, the CPU was 4X as fast as RAM. That meant the CPU would spend four clock cycles (120/30) fetching the required data from RAM. Since the CPU could do nothing else during that period, it was effectively idling, going through four “wait states”. See Exhibit 1.

It was standard practice to improve performance by using Cache Memory to reduce the number of wait states. The strategy continues to this day. In fact, multiple levels of cache are used nowadays, e.g. L1, L2, and L3 cache.

RAM is dynamic memory (DRAM), which needs to be refreshed constantly, whereas Cache is static memory (SRAM), which does not. SRAM is also faster to access than DRAM, so cache is faster than RAM. But cache is also much costlier than RAM.

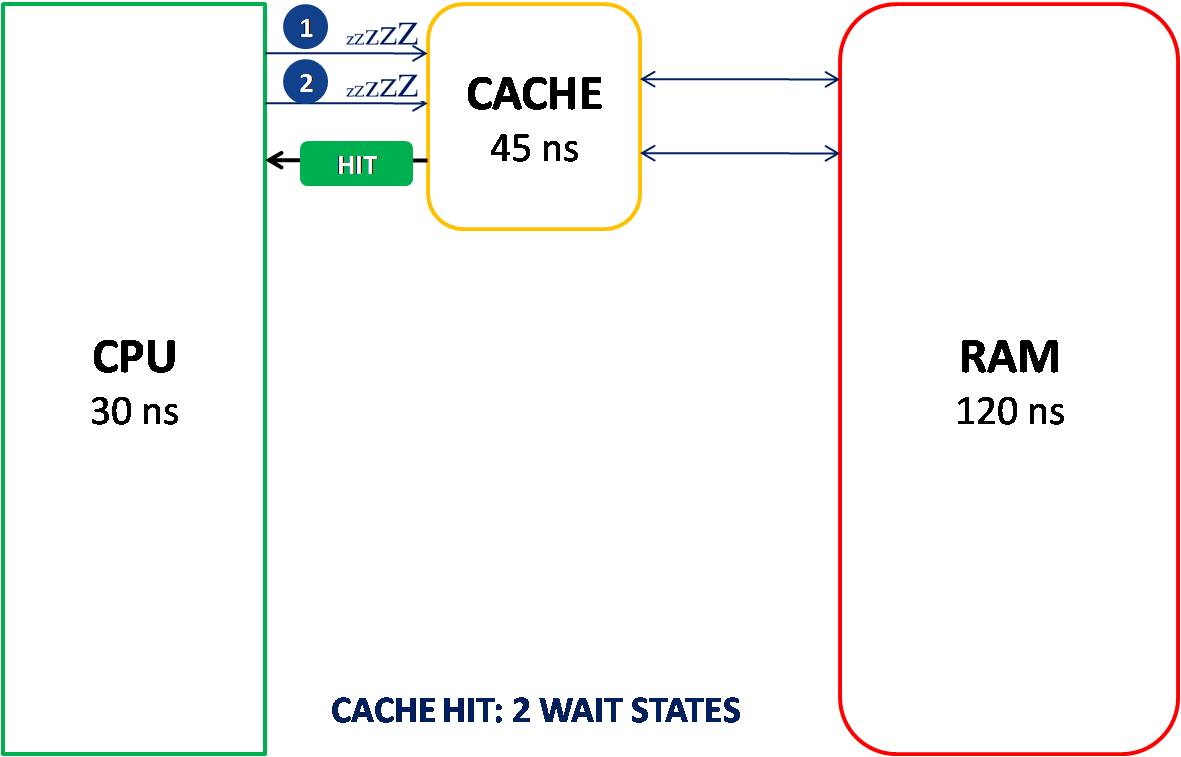

Cache had a response time of 45ns, so the CPU would suffer only two wait states (45/30 = 1.5, rounded up to the nearest integer) to access data from the cache.
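To make the wait-state arithmetic concrete, here is a minimal Python sketch. It assumes the post's simplified model, in which every CPU cycle spent on a memory access counts as a wait state and any partial cycle is rounded up. The constant names and the `wait_states` helper are illustrative, not taken from any real system.

```python
import math

# Response times quoted in the post, in nanoseconds.
CPU_CYCLE_NS = 30
RAM_NS = 120
CACHE_NS = 45

def wait_states(access_ns: float, cycle_ns: float = CPU_CYCLE_NS) -> int:
    """Whole CPU cycles spent waiting on a memory access (partial cycles round up)."""
    return math.ceil(access_ns / cycle_ns)

print("RAM:", wait_states(RAM_NS))      # RAM: 4   (120/30 = 4)
print("Cache:", wait_states(CACHE_NS))  # Cache: 2 (45/30 = 1.5, rounded up)
```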

So introducing a cache between the CPU and RAM would improve performance, assuming the CPU found the required data in the cache, i.e. a “Cache Hit”. See Exhibit 2.

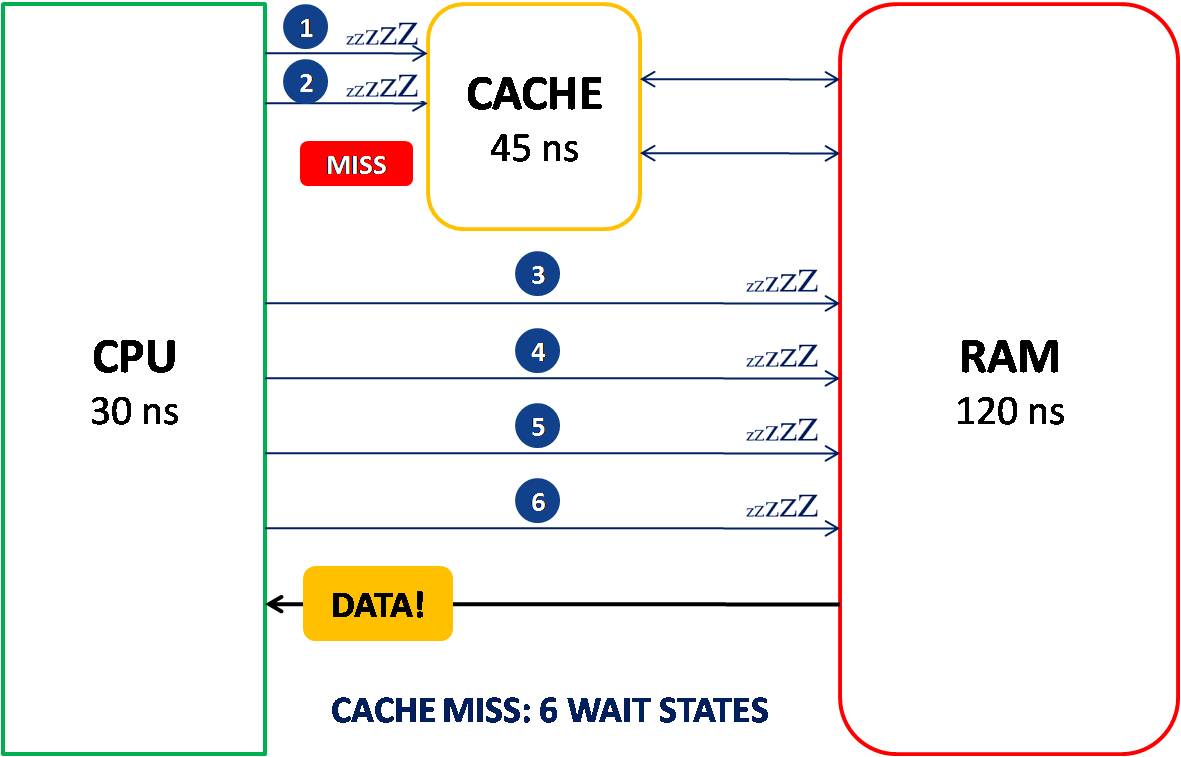

In the event of a “Cache Miss” (the CPU didn’t find the required data in the cache), the CPU would waste two wait states in a futile attempt to fetch the data from the cache, then go through four more wait states to get it from RAM, for a total of six wait states. See Exhibit 3.
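The three access paths (no cache, Cache Hit, Cache Miss) can be sketched as follows. This assumes, as the post describes, that a miss is only discovered after the full cache access, so its cost is the cache lookup plus the RAM fetch; some real cache designs start the RAM fetch in parallel to soften that penalty. `access_wait_states` is a hypothetical helper.

```python
# Wait-state counts from the post: a cache lookup costs 2, a RAM fetch costs 4.
CACHE_WAIT = 2
RAM_WAIT = 4

def access_wait_states(has_cache: bool, hit: bool = False) -> int:
    """Wait states for one memory access under the post's serial-lookup model."""
    if not has_cache:
        return RAM_WAIT              # go straight to RAM
    if hit:
        return CACHE_WAIT            # data found in the cache
    return CACHE_WAIT + RAM_WAIT     # futile cache lookup, then the RAM fetch

print(access_wait_states(has_cache=False))            # 4
print(access_wait_states(has_cache=True, hit=True))   # 2
print(access_wait_states(has_cache=True, hit=False))  # 6
```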

Ideally, all the data in RAM would be mirrored in the cache, and the CPU would always get a “Cache Hit”.

But, in the real world, cache costs 3X as much as RAM for the same capacity, so mirroring all of RAM would have made the computer far too expensive to compete in the market.

The key challenge to getting the performance right was arriving at the optimum cache size: large enough to deliver Cache Hits, but small enough to deliver competitive pricing.

Our studies had shown that ~60KB was the magic number. Above that (say, 64KB), there was a 95% probability of a Cache Hit, leading to two wait states. Below that (say, 32KB), a Cache Miss was guaranteed, leading to six wait states, which was worse than the four wait states of the “no cache” architecture, where the CPU went directly to RAM.
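Because even a large cache still misses occasionally, it helps to look at the average cost per access. The sketch below simply weights the hit and miss costs by the Cache Hit probability; it is an illustration built on the post's numbers, not part of the original studies. It treats the 32KB case as a 0% hit rate, per the statement that a miss was guaranteed. Under this model, a cache only beats the four wait states of the no-cache design when the hit rate exceeds 50%, and a 95% hit rate works out to about 2.2 wait states on average.

```python
CACHE_WAIT = 2       # wait states on a Cache Hit
MISS_WAIT = 2 + 4    # futile cache lookup + RAM fetch on a Cache Miss
NO_CACHE_WAIT = 4    # straight to RAM, no cache at all

def expected_wait_states(hit_rate: float) -> float:
    """Average wait states per access for a given Cache Hit probability."""
    return hit_rate * CACHE_WAIT + (1 - hit_rate) * MISS_WAIT

# Break-even: 2p + 6(1 - p) = 4  =>  6 - 4p = 4  =>  p = 0.5
BREAK_EVEN_HIT_RATE = (MISS_WAIT - NO_CACHE_WAIT) / (MISS_WAIT - CACHE_WAIT)

for label, p in [("32KB (miss guaranteed)", 0.0), ("64KB", 0.95)]:
    print(f"{label}: {expected_wait_states(p):.2f} wait states")
print("no cache:", NO_CACHE_WAIT, "| break-even hit rate:", BREAK_EVEN_HIT_RATE)
```

This prints 6.00 for the 32KB case, 2.20 for the 64KB case, and a break-even hit rate of 0.5.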

The results are summarized in the following table:

| Cache Size | Result | Wait States | Performance |
|---|---|---|---|
| 0KB | No Cache | 4 | MEDIUM |
| 32KB | Cache Miss | 6 | WORST |
| 64KB or above | Cache Hit | 2 | BEST |

It’s clear from the table that the “no cache” architecture performed better than the competitor’s 32KB cache gimmick.

No cache is better than some cache.

#2. CAPITAL FOR NEW COMPANY

The capital raised by a new business should at least cover its cash burn until it reaches its Breakeven Point. Any less, and the company could shut down before breaking even, in which case whatever capital it raised will have gone down the drain. Better not to raise any capital at all. No capital is better than some capital.
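The all-or-nothing arithmetic can be sketched in a few lines. The monthly cash-flow plan below is entirely hypothetical, as are the helper names; the point is only that the capital required is the deepest cumulative deficit before breakeven, and that raising less simply moves the shutdown date earlier.

```python
from itertools import accumulate
from typing import List, Optional

def required_capital(monthly_net_cash_flow: List[float]) -> float:
    """Deepest cumulative cash deficit: the minimum capital needed to reach breakeven."""
    return max(0.0, -min(accumulate(monthly_net_cash_flow), default=0))

def month_cash_runs_out(capital: float, monthly_net_cash_flow: List[float]) -> Optional[int]:
    """1-based month in which cash goes negative, or None if it never does."""
    for month, cumulative in enumerate(accumulate(monthly_net_cash_flow), start=1):
        if capital + cumulative < 0:
            return month
    return None

# Hypothetical plan (in $K): losses shrink until monthly cash flow turns positive in month 5.
plan = [-100, -80, -50, -20, 10, 30]
print(required_capital(plan))          # 250
print(month_cash_runs_out(250, plan))  # None: reaches breakeven
print(month_cash_runs_out(150, plan))  # 2: runs out long before breakeven
```

With this hypothetical plan, $250K carries the company to breakeven, while $150K runs out in month 2 and is spent without ever getting there.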

This is equally true when entering a new geography. You need to make a certain minimum investment to break into mature, well-served markets such as Germany. If you’re not prepared to invest that amount, you shouldn’t invest a fraction of it in the hope of getting a fraction of the returns in the short term. Because you won’t: returns don’t track investments closely in the early stages of market expansion. Whatever you invested will be lost.

As McKinsey observes, when it comes to strategic inputs, how much is as important as what.

Love how @McKinsey conveys the notion that something is not always better than nothing: "Even if a company is doing something, how much it is doing often makes a difference. Strategy is not only about directionality but also materiality." https://t.co/oqUrtYaF2y

— GTM360 (@GTM360) July 15, 2019

That’s it for now.

In a follow-on post, I’ll cover two more situations in which nothing is better than something. Watch this space!